AI and Machine Learning: Navigating the Present and Charting a Course for the Future

These days, it’s hard to get through a day without encountering some form of artificial intelligence (AI) or machine learning (ML). AI and ML have already changed how we experience technology, from targeted ads to conversational interfaces. Even so, the progress made so far in these disciplines is only the beginning. Emerging technologies such as generative AI, blockchain, VR, and the metaverse make the future of AI and ML look even brighter.

Integrating AI and ML will expedite the creation of technologies that can significantly alter how we live and work. In this article, we will discuss the current state of artificial intelligence and machine learning and look at how these technologies will influence the world in the future.

Understanding the Difference Between AI and Machine Learning

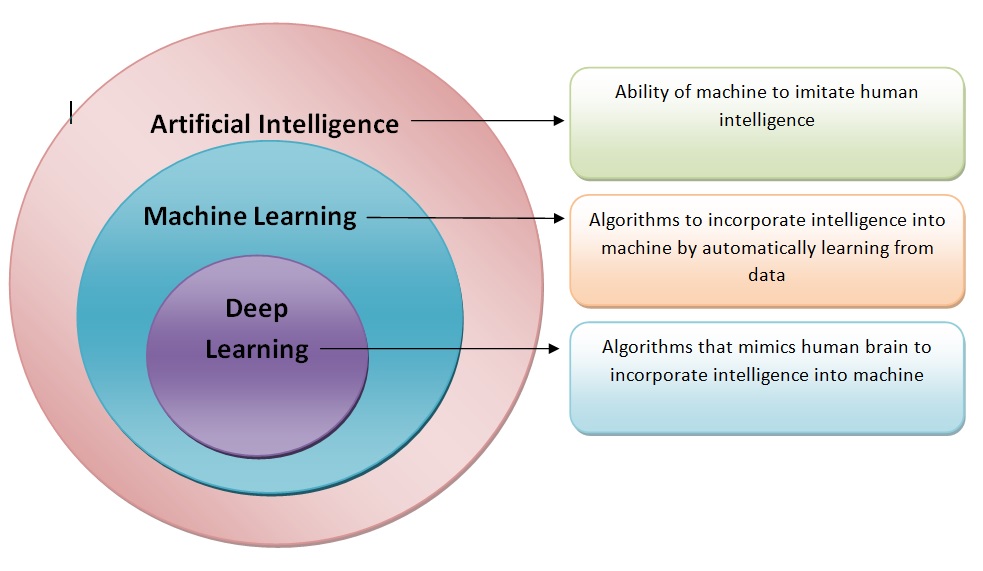

The terms artificial intelligence and machine learning are sometimes used interchangeably. However, there are distinctions between the two. AI is a vast field with many methods for allowing computers to do tasks often associated with human intelligence. Machine learning, on the other hand, is a subset of AI that involves training algorithms to learn from data without explicit programming.
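To make "learning from data without explicit programming" concrete, here is a minimal sketch in Python. It assumes a toy task, converting Celsius to Fahrenheit, that is not part of the original discussion. The function name `fit_line` and the sample readings are illustrative only. Instead of hard-coding the conversion formula, the code estimates it from example pairs using ordinary least squares.

```python
def fit_line(xs, ys):
    """Estimate slope and intercept of y = slope * x + intercept by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Illustrative example pairs (assumed data): Celsius readings and their Fahrenheit values.
celsius = [-10.0, 0.0, 15.0, 30.0, 40.0]
fahrenheit = [14.0, 32.0, 59.0, 86.0, 104.0]

# The rule is recovered from the examples rather than written by hand.
slope, intercept = fit_line(celsius, fahrenheit)
print(f"learned rule: F = {slope:.2f} * C + {intercept:.2f}")
```

An explicitly programmed version would simply return `c * 1.8 + 32`. The learned version arrives at roughly the same rule from examples alone, which is the distinction the paragraph above draws.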

Machine learning is typically applied to specific, well-defined problems, whereas AI as a field is also concerned with creating systems that can perform many different tasks. It’s worth noting that some approaches within machine learning, such as deep learning, can be used to handle complicated problems. Design decisions are sometimes made to prioritize interpretability and ease of evaluation, which may lead to narrower machine learning approaches rather than broad AI methods. While AI and machine learning are closely related, they differ in scope and have diverse applications.

Using AI to Solve Problems: How Pachama Uses AI to Improve Conservation and Reforestation Projects

Companies are using AI to solve a range of problems. Pachama, a conservation technology company, uses AI to achieve three main goals: improving the quality of conservation and reforestation projects, building tools to scale their processes, and conducting research and policy analysis. To improve the quality of their programmes, Pachama uses enormous amounts of data from remote sensing, LIDAR, and field plots to evaluate and quantify the success of their conservation efforts. They also employ AI to create tools that speed up manual procedures like data labelling. Finally, AI supports research and analysis aimed at better understanding the effects of policy changes on their conservation efforts. By utilizing AI in these ways, Pachama hopes to make conservation and reforestation more efficient, scalable, and effective.

Machine learning can process enormous volumes of data and distinguish between signals and noise. Even when using the same public datasets for machine learning, businesses can differentiate themselves by successfully training their models to reveal important insights. A simple methodology for gathering data, a solid infrastructure for processing that data, and a dependable method for testing the correctness of their models are all critical.

Pachama has invested in engineering efforts to mature the infrastructure around data processing. They have developed a method to measure how good their models are, something that some other groups lack. Additionally, Pachama has a defined process for acquiring LIDAR and field plot data, which can be logistically challenging for other companies to set up. These factors allow Pachama to choose the best data sources for their problem and iterate reasonably quickly.
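As a rough illustration of what "measuring how good a model is" can look like, the sketch below compares model predictions against held-out field plot measurements. This is not Pachama's actual method, which the article does not describe. The function names, the choice of metrics, and the biomass values are all assumptions made for the example.

```python
import math


def rmse(predicted, observed):
    """Root mean squared error between predictions and ground-truth measurements."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


def mean_bias(predicted, observed):
    """Average signed error; negative means the model underestimates on average."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p - o for p, o in zip(predicted, observed)) / len(predicted)


# Hypothetical held-out field plots: measured vs. predicted biomass (arbitrary units).
observed_biomass = [120.0, 85.0, 200.0, 150.0]
predicted_biomass = [110.0, 90.0, 190.0, 160.0]

print(f"RMSE: {rmse(predicted_biomass, observed_biomass):.2f}")
print(f"Mean bias: {mean_bias(predicted_biomass, observed_biomass):.2f}")
```

The key design choice is that the plots used for scoring are kept out of training, so the numbers reflect how the model behaves on ground it has not seen.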

Building a Global Map of Carbon Stocks with AI and Remote Sensing Data

For Pachama, building a global map of carbon stocks is critical for understanding and mitigating climate change. However, limited data availability is a bottleneck for achieving accuracy. AI algorithms can process remote sensing data such as LIDAR and radar. The question is whether AI’s impact is limited by a general lack of data or by the lack of the right dataset.

When discussing artificial intelligence (AI), it is critical to understand the various methodologies available, as each is suited to particular problem areas. For example, because models such as ChatGPT are trained to be general-purpose, they may not perform well in highly specialized domains such as medicine. As a result, models often need to be trained on specific problem statements to be accurate and responsive. Yet one of the major hurdles in training AI models is labelling data appropriately. In some circumstances, no ground truth is available, which calls for new data collection, such as airborne LIDAR surveys, or manual labelling of existing data.

Furthermore, while global databases are helpful, they are sometimes insufficient, necessitating additional data collection. The hope is that by gathering enough data and representing it effectively, AI models can extrapolate to other domains and become more general. The amount of data required varies with the complexity of the problem area: more complicated patterns require more data to train the AI models.

In short, data availability is a significant bottleneck in building an accurate global map of carbon stocks. Collecting data is necessary to validate the project’s progress, and AI algorithms can process the resulting remote sensing datasets. Ultimately, the hope is that with enough data, AI algorithms can extrapolate to new areas and create a global map of carbon stocks.

Unlocking the Potential of AI: Realizing its Impact on the World

AI has been touted as the technology that will change the world. While it has been making waves in specific industries, for the average person the promise of AI has not been entirely delivered. Conversations about ethics and the potential impact of AI have been happening in the media and at conferences, but for most people the practical application of AI has been limited. That was true until the emergence of ChatGPT. ChatGPT has made it clear to people what AI can do and how it can make a difference in their daily lives. Its impact has been significant, and the use cases for AI are growing by the day.

ChatGPT has shown that AI can improve customer service, automate tasks, and create content. It has opened up a world of possibilities for businesses and individuals alike, and it’s only the beginning. It is becoming more apparent that AI has the potential to change the world in ways we can’t even imagine yet.

The Importance of Policies and Interventions in AI and ML Applications

AI and ML are powerful tools that can transform many fields, including climate change mitigation. But, just like any other tool, their effectiveness is determined by how they are deployed and regulated. Without appropriate rules and safeguards, AI and ML might exacerbate existing problems and create new ones.

The use of carbon credits is one example of this. While helpful in decreasing greenhouse gas emissions, carbon credits are not a panacea. In some circumstances, employing carbon credits might result in “offsetting,” in which businesses continue to generate enormous volumes of greenhouse gases while just purchasing credits to offset their emissions. This gives firms a false sense of progress and allows them to continue their unsustainable ways.

AI and machine learning can assist in addressing this issue by boosting the accuracy of carbon monitoring and verification. However, even the most accurate monitoring systems can be manipulated without suitable policies and laws. For instance, a corporation could use artificial intelligence to fabricate bogus data to claim carbon credits for emissions reductions that did not occur. As a result, standards and regulations are required to protect the carbon credit system’s integrity and prevent exploitation.

Another example of the need for AI and ML policies and interventions is bias. AI and machine learning algorithms are only as accurate as the data on which they are trained. If the training data contains biases, the algorithm’s output will reflect these biases. This has the potential to perpetuate and even intensify existing societal biases.

Regulations and initiatives are required to address this problem and ensure that training data is diverse and unbiased. Furthermore, the algorithms must be transparent and accountable to guarantee that bias is not perpetuated.
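One simple, commonly used check for this kind of bias is to compare a model's error rate across groups. The sketch below assumes a hypothetical list of `(group, predicted, actual)` records. The group names and values are invented for illustration, and the check is only one of many possible fairness diagnostics.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the fraction of wrong predictions for each group.

    `records` is an iterable of (group, predicted_label, actual_label) tuples.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical evaluation records: (group, predicted label, actual label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 1), ("group_b", 1, 1), ("group_b", 0, 0),
]

rates = error_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: error rate {rate:.2f}")
```

A large gap between groups does not by itself show where the bias comes from, but it is a signal that the training data or the model deserves closer inspection.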

Why We Should Be Excited About Generative AI

Generative AI refers to techniques that enable computers to generate content such as images, text, or sound. One of the most popular generative AI tools is ChatGPT, a conversational AI model developed by OpenAI. Generative AI is fascinating because it can transform how humans interact with machines.

AI has been quietly shaping ad placement and search rankings for a long time. However, generative AI, such as ChatGPT, is different because it can generate new content. This is exciting because it can open up a new world of creative outlets and training data sources.

OpenAI has managed to launch ChatGPT in relative isolation, without the heavy requirements of search or other complex systems. They have also found a way for people to interact with an incredible new technology through conversation, which is exciting. However, generative AI raises concerns about where it gets used next. There is a lot of talk now about responsible AI and about what happens when these models hallucinate or give wrong answers.

Despite the challenges, generative AI has a lot of potential uses, including in the forest carbon space. For example, it could augment training data, a fundamental ingredient of machine learning. While there may not yet be many use cases for generative AI in the carbon markets, it is an area worth exploring.

The Promise of Generative AI for the Future

Generative AI looks set to be a significant part of our future. For a company like Pachama, AI could help build accurate global maps of carbon stocks by absorbing terabytes of remote sensing data and merging them with LIDAR and radar data. While a shortage of data is a bottleneck, generative AI can also produce its own data, making it a valuable tool for researchers.

Furthermore, as technology progresses and more data is fed into the models, they may begin to generalize and extrapolate to new areas, which is a tantalizing prospect for future developments. The power of generative AI rests in its capacity to generate new data and to evaluate and interpret complicated patterns in data at great speed. As a result, it has the potential to address some of the most challenging problems in a variety of industries, ranging from healthcare to banking to transportation, and to alter how we live and work.